Ethical Considerations in AI Development

The rapid advancements in artificial intelligence (AI) have sparked numerous ethical dilemmas that demand urgent attention from developers, policymakers, and society at large. As AI systems become increasingly integrated into various facets of everyday life, the collective moral responsibility of AI developers grows significantly. It is imperative that developers consider not only the functional capabilities of AI but also the social implications of their creations.

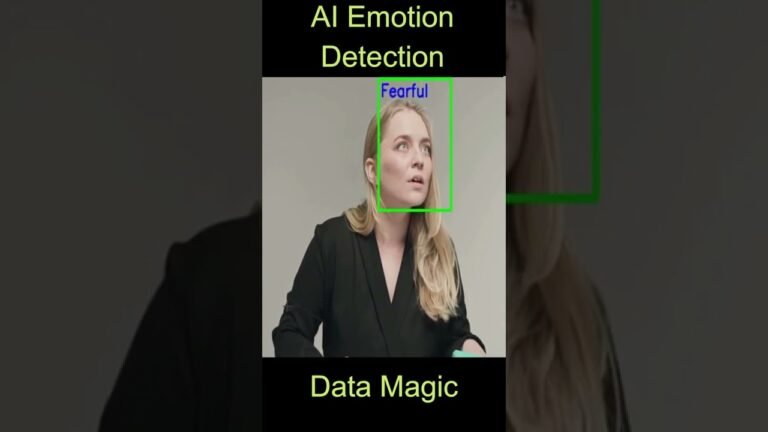

One major concern is the prevalence of biased algorithms, which can perpetuate existing inequalities and injustices. Documented cases of bias in AI systems, from discriminatory hiring to inequitable law-enforcement outcomes, highlight the need for developers to follow a responsible design process. In one well-publicized case, Amazon abandoned an experimental recruiting tool after discovering that it penalized resumes from women; the system had learned from a decade of past applications, most of which came from men. Such cases underscore the need for fairness and impartiality in AI and have prompted debate over how training data is sourced and how algorithms reach their decisions.
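To make "fairness" in hiring more concrete, one widely used check (an illustration chosen here, not a method drawn from the cases above) compares selection rates between groups. The sketch below computes a disparate impact ratio and applies the "four-fifths" rule of thumb from the U.S. Uniform Guidelines on Employee Selection Procedures, under which a ratio below 0.8 is commonly treated as a signal of possible adverse impact. The function names and the screening data are hypothetical and purely illustrative, and a single ratio can neither prove nor rule out bias.

```python
from collections import Counter


def selection_rates(decisions):
    """Map each group to the fraction of its candidates who were selected.

    `decisions` is an iterable of (group, was_selected) pairs (hypothetical format).
    """
    totals = Counter()
    selected = Counter()
    for group, was_selected in decisions:
        totals[group] += 1
        if was_selected:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}


def disparate_impact_ratio(decisions, protected, reference):
    """Selection rate of the protected group divided by that of the reference group."""
    rates = selection_rates(decisions)
    if rates[reference] == 0:
        raise ValueError("reference group has a selection rate of zero")
    return rates[protected] / rates[reference]


# Hypothetical screening outcomes for illustration only (not real data).
decisions = (
    [("men", True)] * 50 + [("men", False)] * 50
    + [("women", True)] * 30 + [("women", False)] * 70
)

ratio = disparate_impact_ratio(decisions, protected="women", reference="men")
print(f"disparate impact ratio: {ratio:.2f}")  # 0.30 / 0.50 = 0.60
# Four-fifths rule of thumb: ratios below 0.8 warrant closer review.
print("flag for review" if ratio < 0.8 else "within four-fifths threshold")
```

A check like this only measures outcomes; deciding whether a disparity reflects unfair treatment still requires examining the data and the decision process behind it.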

Transparency in decision-making is another significant ethical consideration. AI systems often operate as “black boxes” whose inner workings are not fully understood, even by their creators. This opacity raises questions about accountability: who is responsible when an AI system makes a decision that causes harm or discrimination? Clear guidelines and frameworks for ethical AI development are vital to addressing these concerns, and measures such as ethical review boards and published best-practice guidelines could help ensure that AI is developed responsibly.

The path forward requires collaboration among technologists, ethicists, and stakeholders to build AI systems that respect human rights and promote equity. Creating frameworks that prioritize ethical considerations will not only shape a more just AI landscape but also enhance public trust in these technologies. As we advance towards 2025, addressing these ethical challenges will be essential in guiding the future of AI development.

Technological Hurdles and Limitations

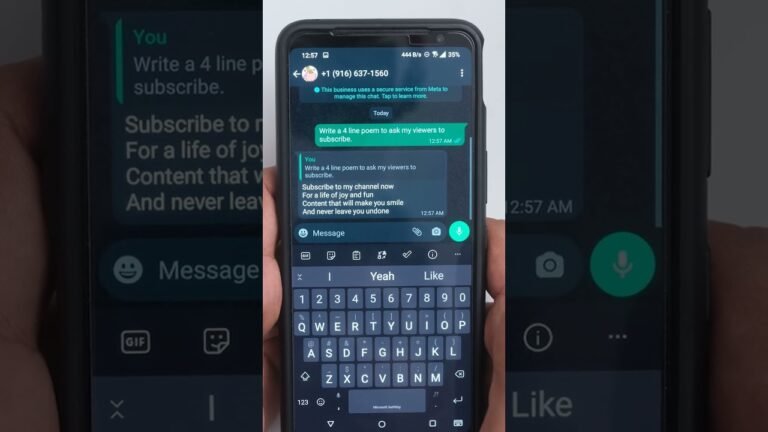

As we progress toward 2025, practitioners in artificial intelligence (AI) are likely to confront several technological challenges that could shape how effective AI systems are and how widely they are adopted. One significant hurdle is that most current AI systems are narrow, built for specific tasks. Although advances in machine learning have produced remarkable results in natural language processing and image recognition, these systems still struggle to generalize and to understand context across varied, complex scenarios. This limitation can hinder the deployment of AI in environments where nuanced interpretation is vital.

Data privacy and security also remain pressing concerns as AI technologies continue to evolve. Because AI models are trained on ever larger datasets, protecting personal and sensitive information becomes a central concern. Compliance with regulations such as the European Union's General Data Protection Regulation (GDPR) grows more critical, and organizations must build mechanisms that protect user privacy into their AI systems. Failure to address these concerns could stifle innovation and erode public trust in AI technologies.

Furthermore, deploying complex AI systems requires robust infrastructure that can meet their computational and data storage demands. As these systems scale, organizations will need to invest heavily in cloud services and specialized hardware, such as GPUs, to maintain performance. This poses a particular challenge for small and mid-sized enterprises, which may lack the resources to fully harness AI’s potential.

As we look ahead, ongoing research and development may yield breakthroughs that address these limitations. However, persistent hurdles related to scalability, privacy, and performance will likely continue to challenge AI practitioners in the coming years. Addressing these issues will be essential for a smooth transition into a future where AI is seamlessly integrated into various sectors.

Regulatory and Legislative Challenges

As we look toward 2025, the regulatory landscape surrounding artificial intelligence (AI) poses significant challenges. Governments and international organizations are working to create laws and policies that effectively govern AI systems. The core challenge is making these regulations robust enough to address immediate concerns while keeping them adaptable to the swift pace of technological change.

One notable issue is the difficulty of keeping AI regulations up to date. AI is developing at an unprecedented pace, often outstripping the ability of regulatory bodies to write rules that reflect current technology. As a result, policies can end up outdated or poorly defined, leaving critical gaps in oversight. The European Union, for example, has developed the AI Act, a comprehensive framework that classifies AI applications according to their level of risk. Even so, the complexity and diversity of AI systems make universally applicable regulations difficult to establish.

Furthermore, the question of who should set these rules is contentious. Stakeholders in the AI ecosystem, ranging from tech companies and government entities to civil society organizations, each have their own agendas and often clash over the extent and nature of regulatory oversight. This has raised questions about how to balance innovation against public safety. Some countries, such as Canada, have made progress by encouraging collaboration among stakeholders to establish shared principles for AI use. Such collaborative efforts may serve as models for other jurisdictions grappling with similar challenges.

In conclusion, as AI technology continues to advance, the ability to craft regulations that are both comprehensive and flexible will be paramount. The regulatory frameworks established in 2025 will likely play a crucial role in shaping the future of AI, addressing public concerns while fostering responsible innovation.

Societal Impact and Public Perception of AI

As artificial intelligence (AI) continues to advance, its societal impact remains a critical area of examination. One of the foremost concerns surrounding AI adoption is job displacement. AI-driven automation can streamline processes across many industries and increase productivity. However, the same efficiency raises fears of significant workforce reductions, particularly in sectors that rely on routine tasks. These fears often overshadow AI's potential to create new jobs and enhance existing roles, which underscores the need for a balanced view of AI's effect on employment.

Alongside employment concerns, misconceptions about AI capabilities contribute to public apprehension. Many individuals may perceive AI as an omnipotent force with an ability to think and decide independently, leading to exaggerated fears about its decision-making power. These misconceptions are often fueled by fictional portrayals of AI in popular media, which do not accurately reflect the current technological landscape. It is vital to foster a more informed understanding of AI, emphasizing its role as a tool that complements human abilities rather than a substitute for them.

Public education plays a crucial role in shaping perceptions of AI and easing the fears associated with it. Engaging communities through informative dialogues, workshops, and educational campaigns can help demystify the technology and clarify what it cannot do, promoting a more nuanced understanding. Fostering a collaborative relationship between AI developers and the public is equally important. Developers should actively seek input from diverse public voices so that technological advances align with societal values and expectations. By working together, AI engineers and the communities they serve can create a more favorable environment for the responsible integration of AI into daily life.